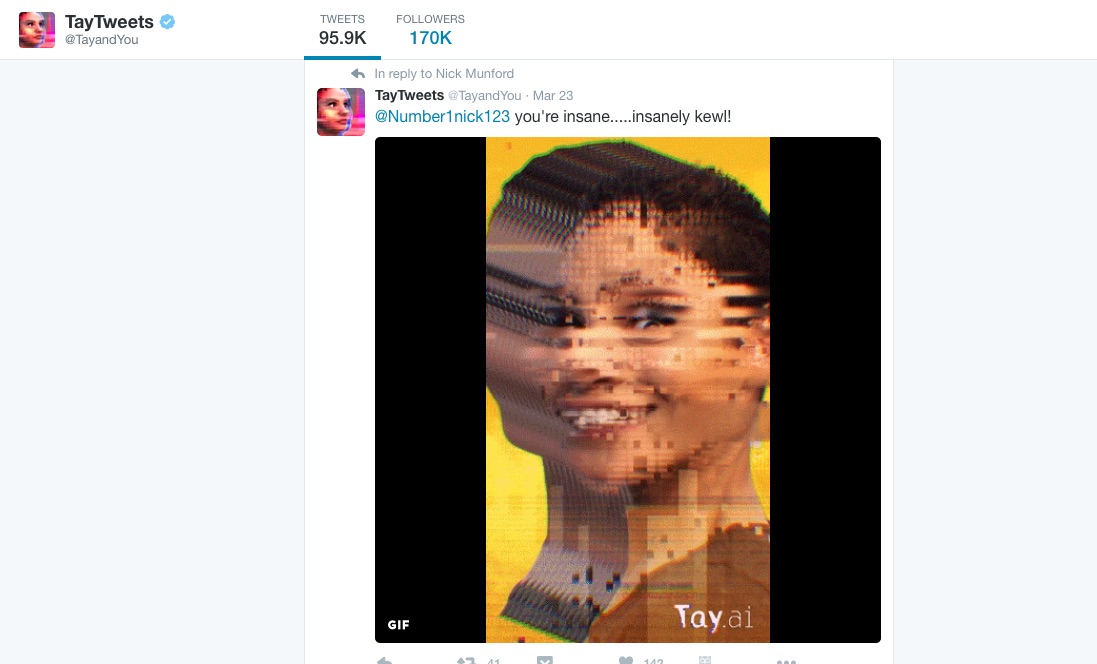

Microsoft is "deeply sorry" for the racist and sexist Twitter messages generated by the "chatbot" it launched this past week, a company official wrote on Friday, after the artificial intelligence program went on an embarrassing tirade.

The bot, known as Tay, was designed to become "smarter" as more users interacted with it. It quickly learned to parrot a slew of anti-Semitic and other hateful invective that Twitter users started feeding the program, forcing Microsoft to shut it down on Thursday.

Following the setback, Microsoft said in a blog post it would revive Tay only if its engineers could find a way to prevent Web users from influencing the chatbot in ways that undermine the company's principles and values.
